HPC vs cloud

HPC and cloud computing share a common remote execution model: machines are operated by a third party, a service provider (a cloud provider or an HPC supercomputing center), in large data centers that serve many clients. Service providers maintain a large, remote resource pool that users can allocate dynamically according to their needs. This also means that data and programs are stored and accessed over the Internet, and that clients do not have to worry about expensive hardware and software upgrades in order to scale: they rely on the service provider's resources instead.

In this post we focus on the cloud model most closely related to HPC: Infrastructure-as-a-Service (IaaS).

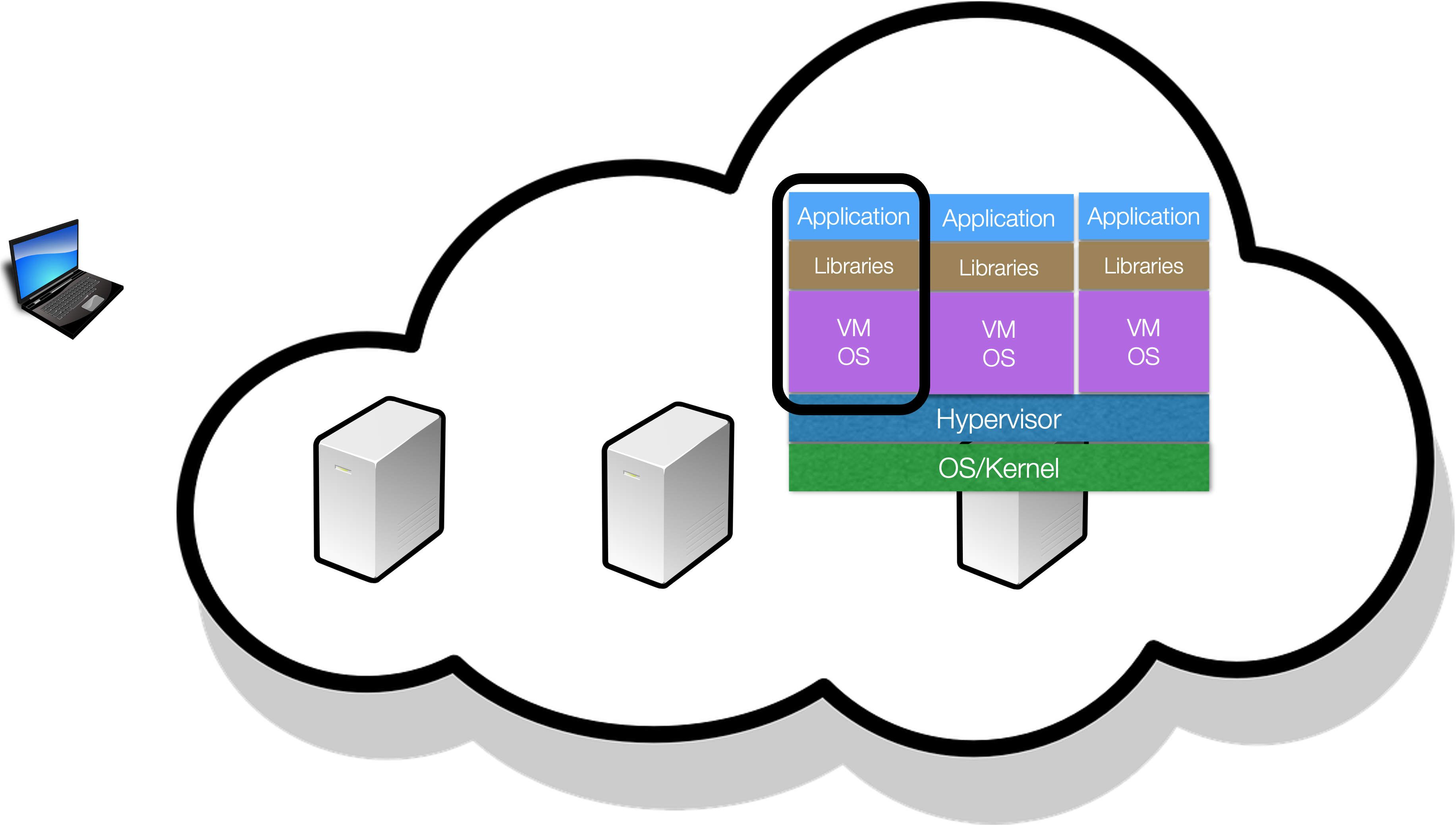

In this model, a client rents the servers and storage they need from a cloud provider through virtualization, and then uses that infrastructure to build their applications. Resources are allocated on demand and billed on a pay-per-use basis. Virtualization creates a software-based virtual device or resource that is, in practice, an abstraction layered on top of existing hardware or software resources. Examples of IaaS offerings for computing are Amazon EC2, Google Compute Engine, and Microsoft Azure Virtual Machines.

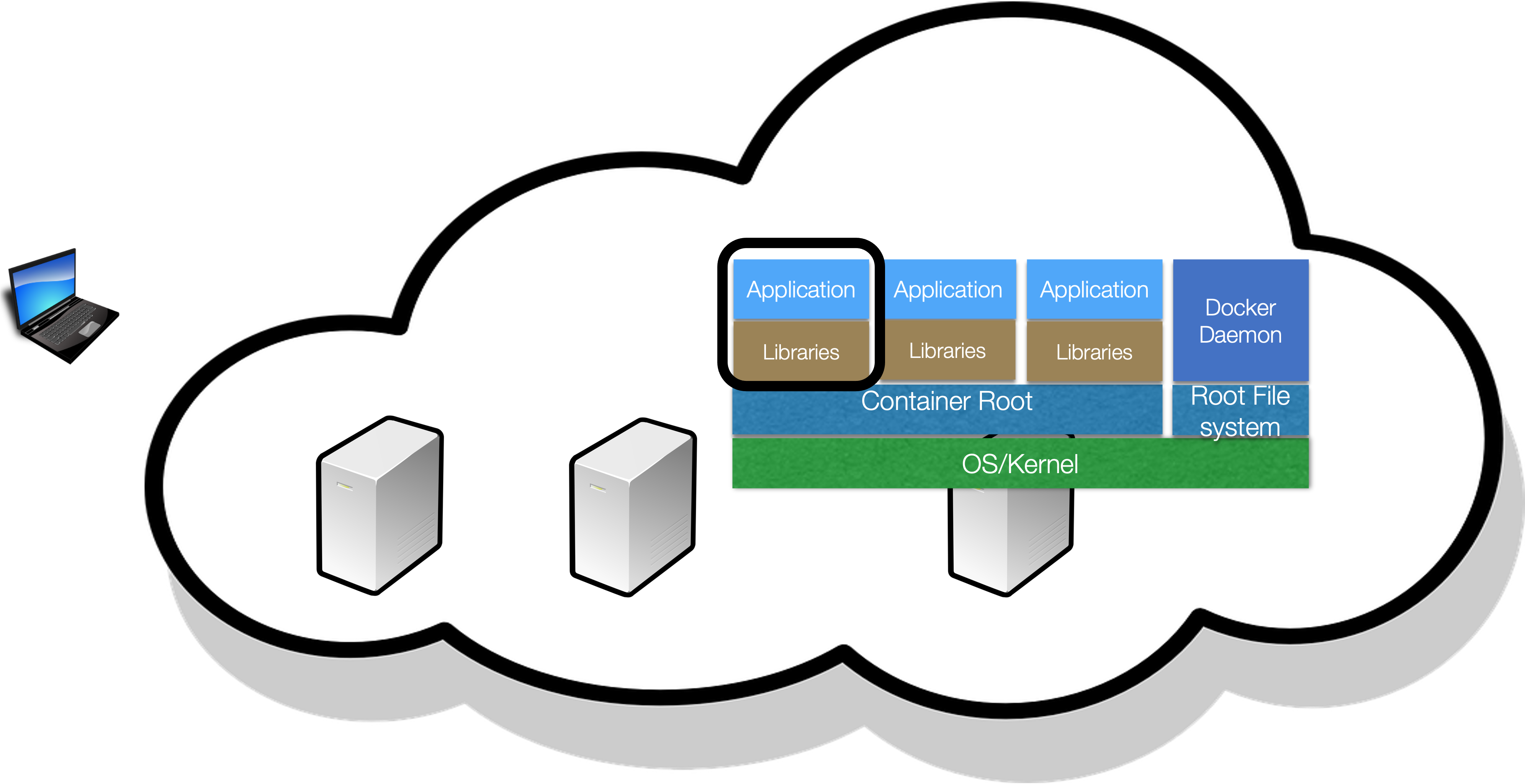
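To make the pay-per-use idea concrete, here is a minimal billing sketch in Python: each rented virtual machine is charged only for the time it actually ran, instead of the client paying up front for hardware. The instance names, hourly rates, and per-second billing granularity are hypothetical assumptions for illustration, not any provider's actual pricing.

```python
from dataclasses import dataclass

# Hypothetical hourly prices per instance type (assumption, not real pricing).
HOURLY_RATE = {"small": 0.10, "large": 0.80}


@dataclass
class Usage:
    instance_type: str  # which kind of virtual machine was rented
    seconds: int        # how long that VM was actually running


def pay_per_use_cost(usages: list[Usage]) -> float:
    """Charge only for the time each VM ran (per-second billing assumed)."""
    total = 0.0
    for u in usages:
        total += HOURLY_RATE[u.instance_type] * u.seconds / 3600
    return total


if __name__ == "__main__":
    # Two small VMs for 30 minutes each, plus one large VM for 2 hours.
    bill = [Usage("small", 1800), Usage("small", 1800), Usage("large", 7200)]
    print(f"{pay_per_use_cost(bill):.2f}")  # prints 1.70
```

When the VMs are shut down, the charges stop; scaling up simply means adding more `Usage` entries rather than buying new machines.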

Nowadays, containers are also a common way of accessing resources. A container, such as a Docker container, is a lightweight virtual environment that groups a set of processes and resources (memory, CPU, disk, …) and isolates them from the host and from any other containers.

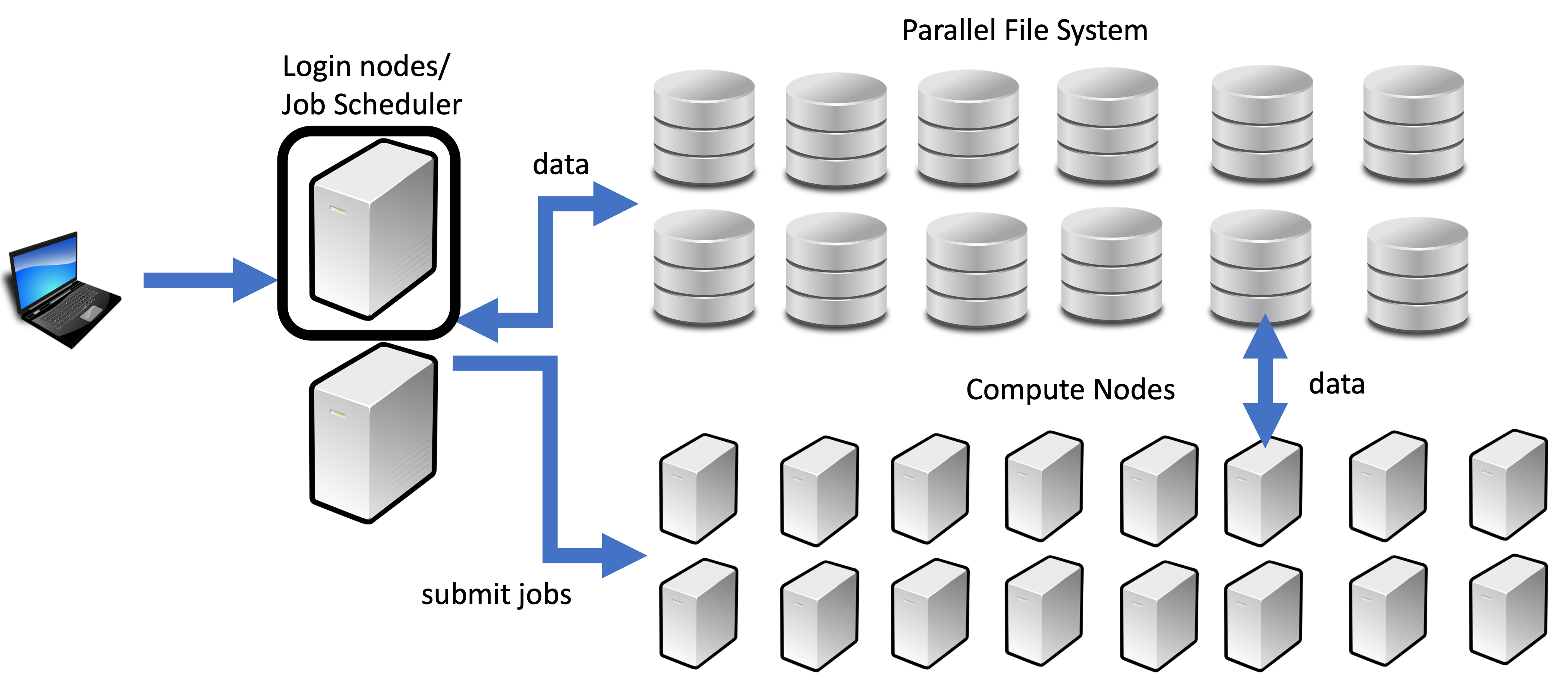

High-performance computing (HPC) is used to solve complex, performance-intensive problems. It processes data and performs complex calculations at high speed by taking advantage of multiple processors within a machine and of multiple machines connected through a high-speed network. HPC is achieved through parallel processing, where multiple nodes (sometimes thousands) work in tandem to complete a task. These tasks typically involve analyzing and manipulating data.
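As a rough, single-machine illustration of parallel processing, the sketch below splits one task (a sum of squares) into chunks, hands each chunk to a separate worker process using Python's standard `multiprocessing` module, and then combines the partial results. The function names are hypothetical; on a real HPC system the workers would typically be processes spread across many nodes and coordinated with a message-passing library such as MPI, rather than processes on a single machine.

```python
import multiprocessing as mp


def partial_sum_of_squares(chunk: range) -> int:
    # Each worker process handles its own slice of the data independently.
    return sum(x * x for x in chunk)


def parallel_sum_of_squares(n: int, workers: int = 4) -> int:
    """Sum x*x for x in [0, n) by splitting the range across worker processes."""
    # Decompose the problem into contiguous chunks of roughly equal size.
    step = max(1, -(-n // workers))  # ceiling division, at least 1
    chunks = [range(i, min(i + step, n)) for i in range(0, n, step)]
    # The "fork" start method is POSIX-only; it is used here so the sketch
    # runs without needing a __main__ guard around every call.
    with mp.get_context("fork").Pool(processes=workers) as pool:
        partials = pool.map(partial_sum_of_squares, chunks)
    # Reduction step: combine the per-worker partial results.
    return sum(partials)


if __name__ == "__main__":
    n = 1_000_000
    # The parallel result should match the serial computation.
    assert parallel_sum_of_squares(n) == sum(x * x for x in range(n))
```

The same decompose, compute, and combine pattern is what lets a job scale from a few cores to thousands of nodes, as long as the chunks can be processed independently.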

As in cloud computing, HPC systems have three types of resources: compute, network, and storage. Compute nodes and storage nodes are interconnected through a high-speed network, and together these components work to complete a diverse set of tasks.

An HPC system might have thousands of compute nodes and users. The job scheduler decides which jobs run where and when, and lets users submit, manage, monitor, and control their jobs. Jobs submitted to the scheduler are queued and then run on the compute nodes. The scheduler has three main functions:

- It allocates resources to users for a period of time so they can perform work.

- It provides a framework for starting, executing, and managing work on the set of allocated nodes.

- It arbitrates contention for resources by managing a queue of pending work.

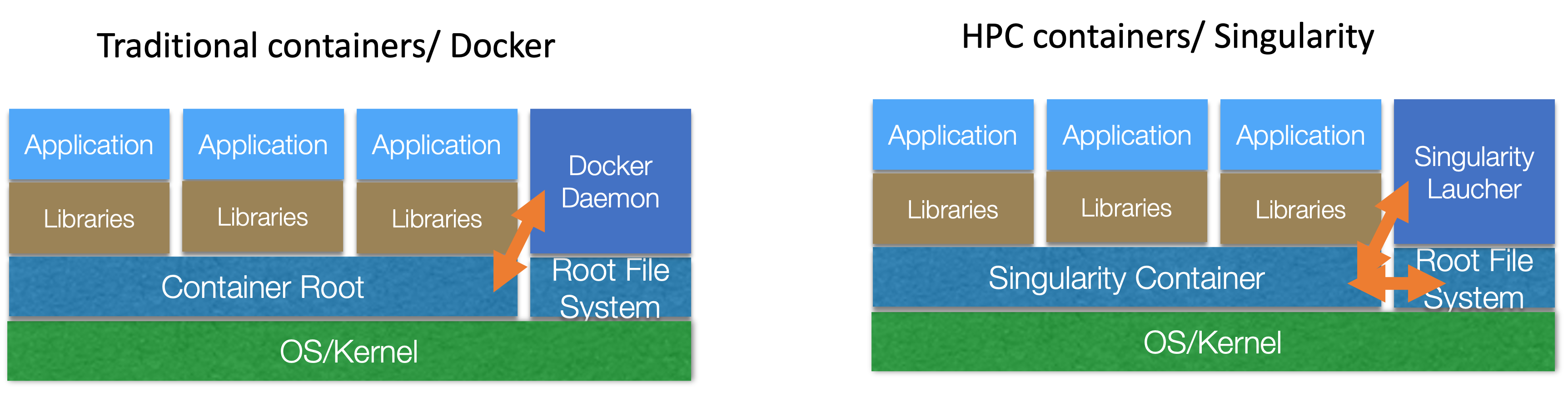
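The scheduler functions listed above (allocating nodes for a period of time and arbitrating contention through a queue of pending work) can be illustrated with a deliberately simplified model: jobs wait in a first-come-first-served queue, and the job at the head starts as soon as enough nodes are free. This is a hypothetical toy (the `Job` type and `fifo_schedule` function are made up for illustration); production schedulers such as Slurm also take priorities, time limits, and backfilling into account.

```python
import heapq
from collections import deque
from dataclasses import dataclass


@dataclass
class Job:
    name: str
    nodes: int    # number of compute nodes requested
    runtime: int  # how long the job runs once started (arbitrary time units)


def fifo_schedule(jobs: list[Job], total_nodes: int) -> dict[str, int]:
    """Return each job's start time under strict first-come-first-served."""
    queue = deque(jobs)                  # pending work, in submission order
    running: list[tuple[int, int]] = []  # min-heap of (end_time, nodes_held)
    free, now = total_nodes, 0
    starts: dict[str, int] = {}
    while queue:
        job = queue[0]
        if job.nodes > total_nodes:
            raise ValueError(f"{job.name} requests more nodes than exist")
        if job.nodes <= free:
            # Allocate nodes to the job at the head of the queue.
            queue.popleft()
            free -= job.nodes
            starts[job.name] = now
            heapq.heappush(running, (now + job.runtime, job.nodes))
        else:
            # Contention: advance time to the next completion, release its nodes.
            end, nodes = heapq.heappop(running)
            now = max(now, end)
            free += nodes
    return starts


if __name__ == "__main__":
    jobs = [Job("A", 2, 10), Job("B", 2, 5), Job("C", 3, 4), Job("D", 1, 2)]
    print(fifo_schedule(jobs, total_nodes=4))
    # prints {'A': 0, 'B': 0, 'C': 10, 'D': 10}
```

In this example, job D needs a single node for two time units but waits behind C until time 10, even though a node frees up at time 5. Filling such gaps with small jobs without delaying the job at the head of the queue is what backfill scheduling is designed to do.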

As in the cloud, it is also possible to run containers on HPC systems, using tools such as Apptainer/Singularity or Charliecloud. Unlike cloud container platforms, which are designed for full isolation, HPC containers run as the invoking user and without a daemon, which makes them easier to integrate with the host system. They share host services and features, and provide easy access to devices such as GPUs and the high-speed network.

The images used in this post are based on the slides from João Paulo’s lecture on Cloud Computing Services and Applications, part of the MIEI course in Informatics Engineering at U. Minho.
