Benchmarking performance and energy efficiency of Python dataframes frameworks

Dataframes are packages in scripting languages that provide data analytics algorithms mimicking relational algebra, linear algebra, and spreadsheet computation. For relational operations, they support filtering rows based on conditions, joining dataframes, and aggregating column values by a key column, among others. They also usually provide advanced analytics operations (e.g. moving averages) and are tightly integrated with the underlying array system to support array computations.

Dataframes are tolerant of unknown data structure and well-suited to developer and data scientist workflows, with variables as columns and observations as rows.

Characteristics such as these have helped dataframes become incredibly popular for data exploration and data science. In particular, the dataframe abstraction provided by pandas in Python has been downloaded hundreds of millions of times. However, most dataframe frameworks face performance issues even on moderately large datasets. Pandas in particular is limited by being single-threaded and by performing all computations in memory.

In this post I benchmark dataframe packages with a pandas-like Python interface and evaluate both the performance and the energy efficiency of several frameworks. Some of them support out-of-core operations (processing tabular data larger than available RAM), and some also support distributed computing, but in this post I focus on single-node results with different dataset sizes.

The experiments were run on a machine with CentOS Linux 7.8 (kernel 3.10), 32GB of RAM, 80 Intel(R) Xeon E5-2698 @ 2.20GHz CPU cores, and an HDD. The evaluated frameworks were: Pandas, Bodo, Vaex, Intel Distribution of Modin, Dask, Koalas, Polars and Cylon.

The full code to reproduce these experiments and more details can be found in .

Briefly, the benchmark uses the Yellow Taxi Trip Records from the NYC TLC Trip Record Data. After loading the data from a CSV or Parquet file, it performs the following operations: join, mean, sum, groupby, multiple_groupby, and unique_rows. Some operations are not yet supported on all frameworks (either due to lack of documentation or because I was unable to make them work without crashing). Each operation was measured both with and without a preceding filter operation.

For example, the current Pandas implementation of the operations is:

    import pandas as pd


    class Pandas:
        def load(self, file_path):
            # Read the Parquet version of the dataset
            self.df = pd.read_parquet(file_path + ".parquet")

        def filter(self):
            # Keep only trips with a tip between 1 and 5 (inclusive)
            self.df = self.df[(self.df.tip_amount >= 1) & (self.df.tip_amount <= 5)]

        def mean(self):
            return self.df.passenger_count.mean()

        def sum(self):
            # Row-wise total of all fare components
            return (self.df.fare_amount + self.df.extra + self.df.mta_tax
                    + self.df.tip_amount + self.df.tolls_amount
                    + self.df.improvement_surcharge)

        def unique_rows(self):
            return self.df.VendorID.value_counts()

        def groupby(self):
            return self.df.groupby("passenger_count").tip_amount.mean()

        def multiple_groupby(self):
            return self.df.groupby(["passenger_count", "payment_type"]).tip_amount.mean()

        def join(self):
            payments = pd.DataFrame({
                "payments": ["Credit Card", "Cash", "No Charge", "Dispute", "Unknown", "Voided trip"],
                "payment_type": [1, 2, 3, 4, 5, 6],
            })
            # Join the lookup table on the payment_type column
            return self.df.merge(payments, on="payment_type")
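
To make the filter and join steps concrete, here is a minimal sketch on a tiny synthetic frame. The `trips` values are made up for illustration and are not taken from the taxi dataset; the column names and the tip range follow the implementation above, and the join is keyed on the `payment_type` column via `on=`.

```python
import pandas as pd

# Tiny synthetic stand-in for the taxi data (values are invented).
trips = pd.DataFrame({
    "payment_type": [1, 2, 1, 3],
    "tip_amount": [2.0, 0.0, 4.5, 1.0],
})

# Lookup table mapping payment codes to labels, as in the benchmark.
payments = pd.DataFrame({
    "payments": ["Credit Card", "Cash", "No Charge", "Dispute", "Unknown", "Voided trip"],
    "payment_type": [1, 2, 3, 4, 5, 6],
})

# Same filter as the benchmark: keep tips between 1 and 5 (inclusive).
filtered = trips[(trips.tip_amount >= 1) & (trips.tip_amount <= 5)]

# Inner join on the shared key column; each trip gets its payment label.
joined = filtered.merge(payments, on="payment_type")
print(joined)
```

The trip with a zero tip is dropped by the filter, so the joined result only contains rows for the remaining three trips.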

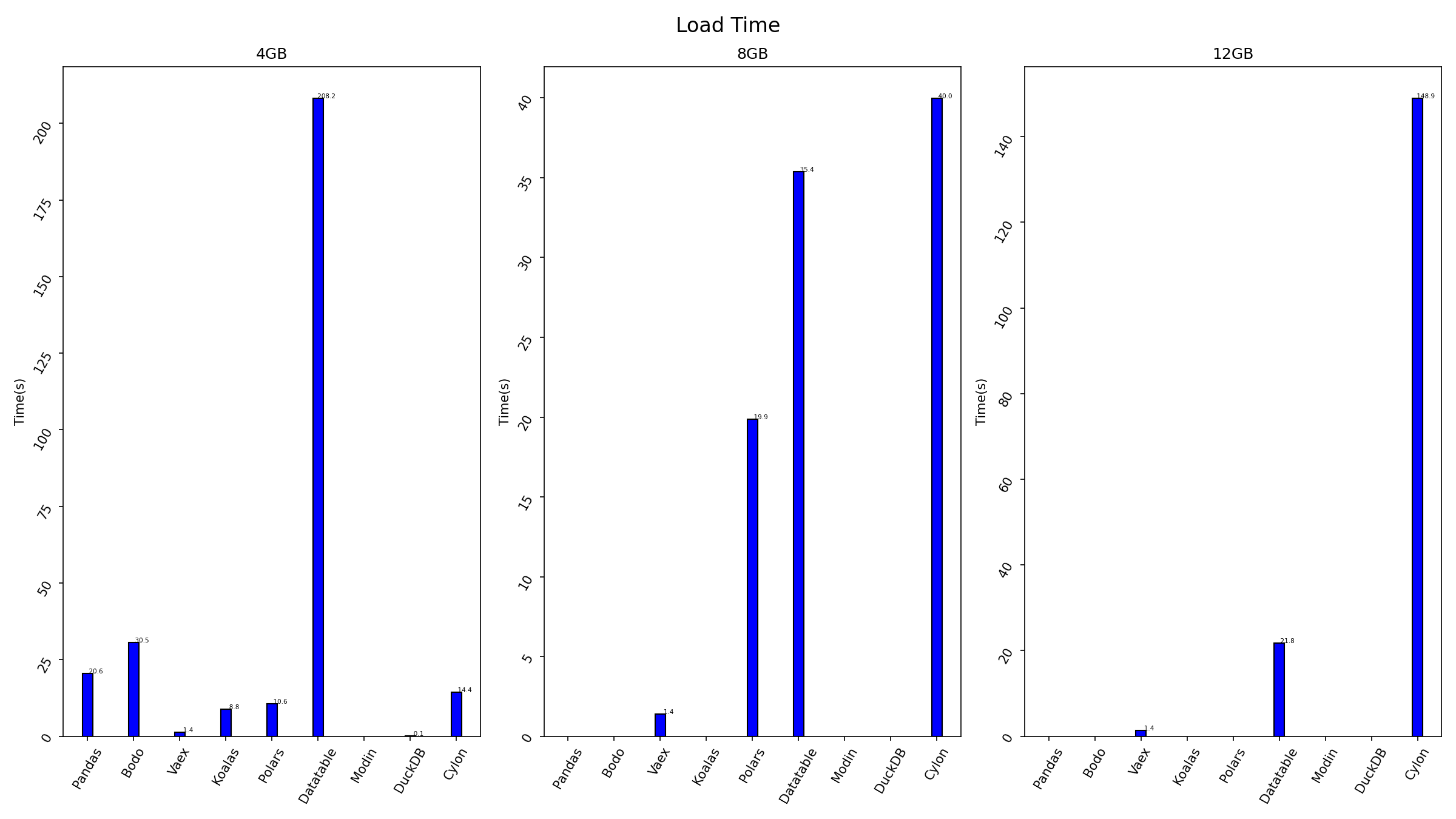

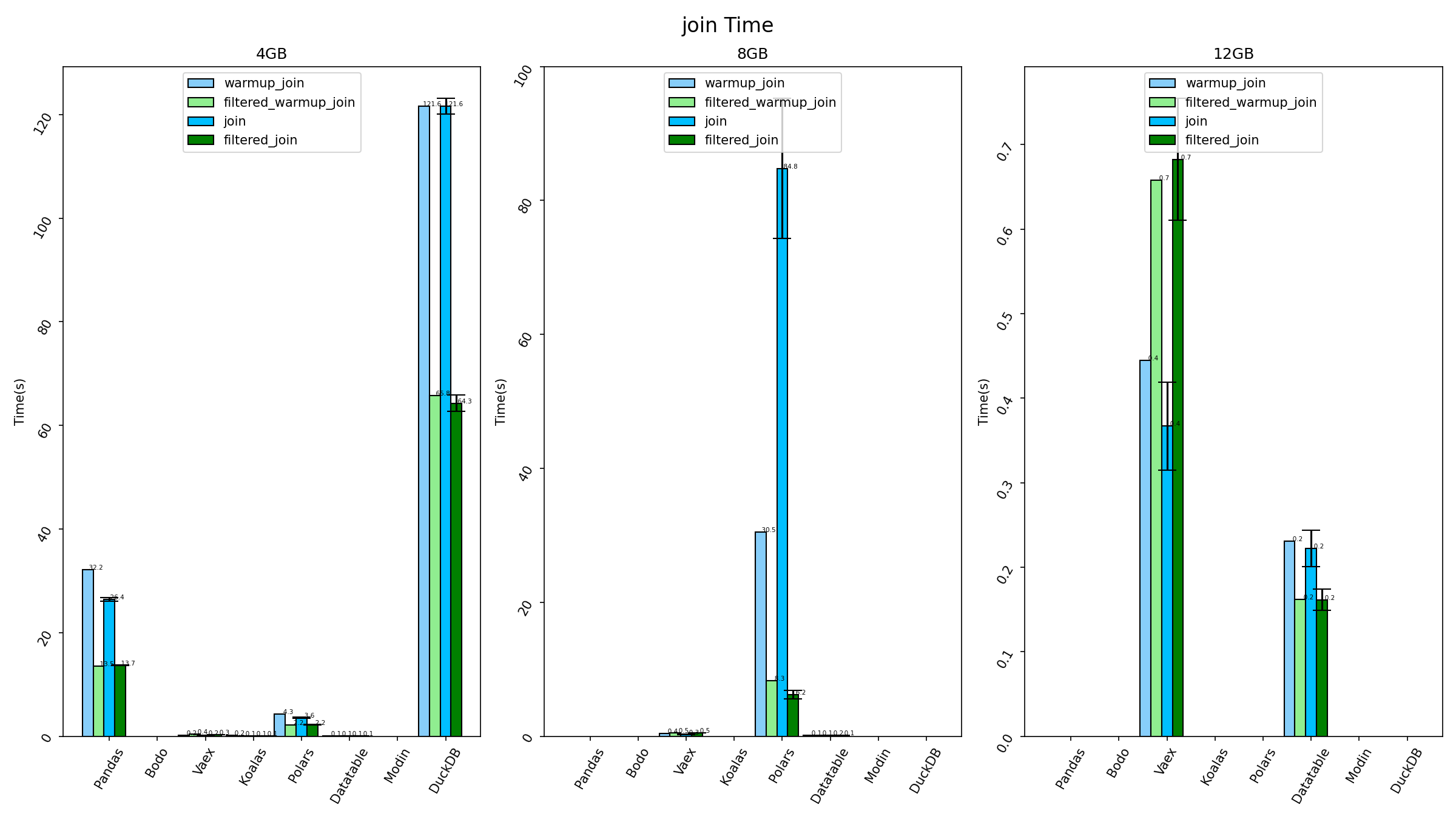

All figures below show the results for three different dataset sizes. Missing results for a given framework are due either to the operation not being supported or to the framework being unable to load a dataset of that size. For the 8GB dataset, only Vaex, Polars, Datatable and Cylon were able to load the data, and for the 12GB dataset Polars was also unable to.

The following figure shows the load time.

Vaex has the smallest load time for almost all dataset sizes, and its load time stays constant because data is read directly from disk as needed.

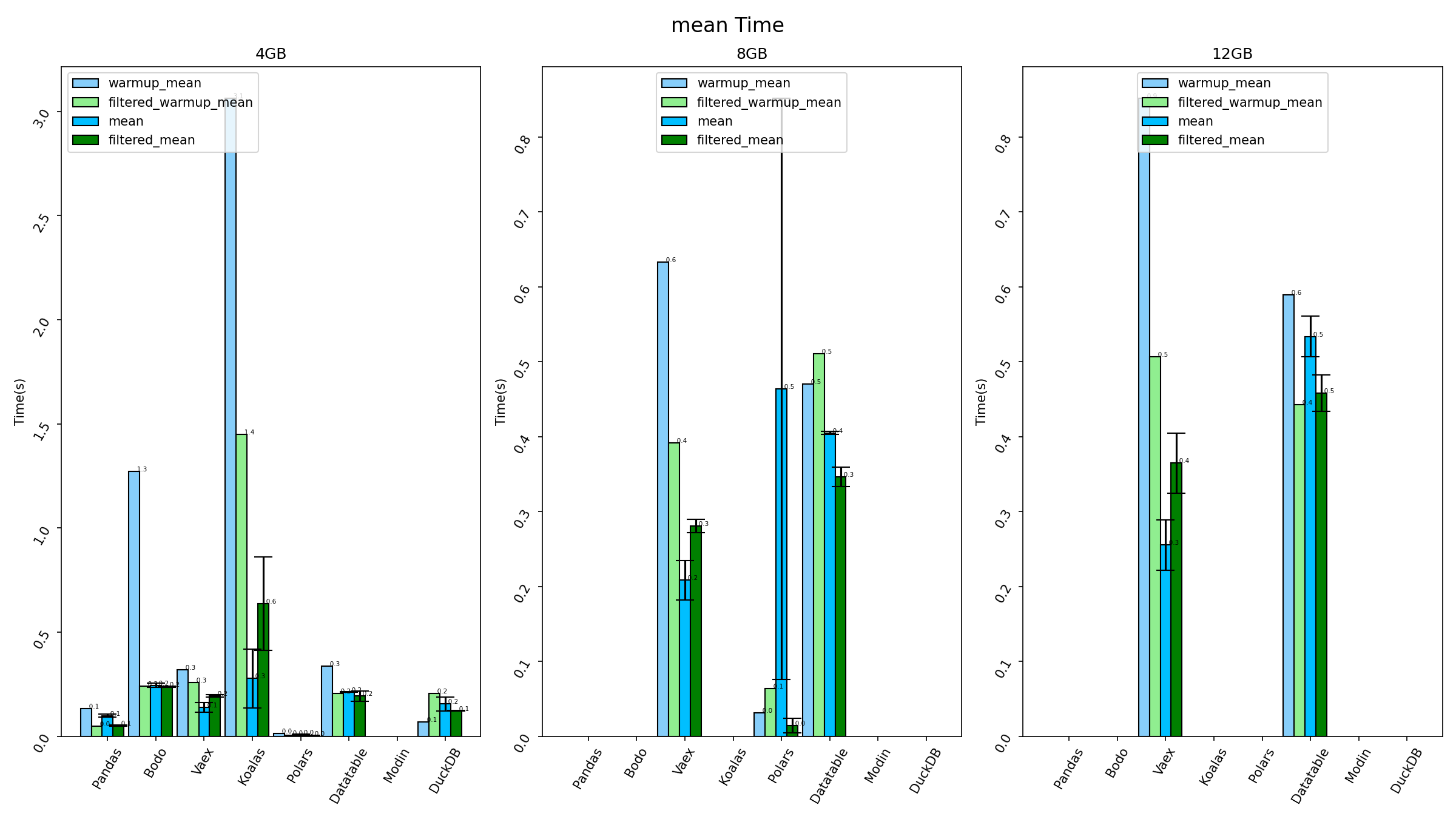

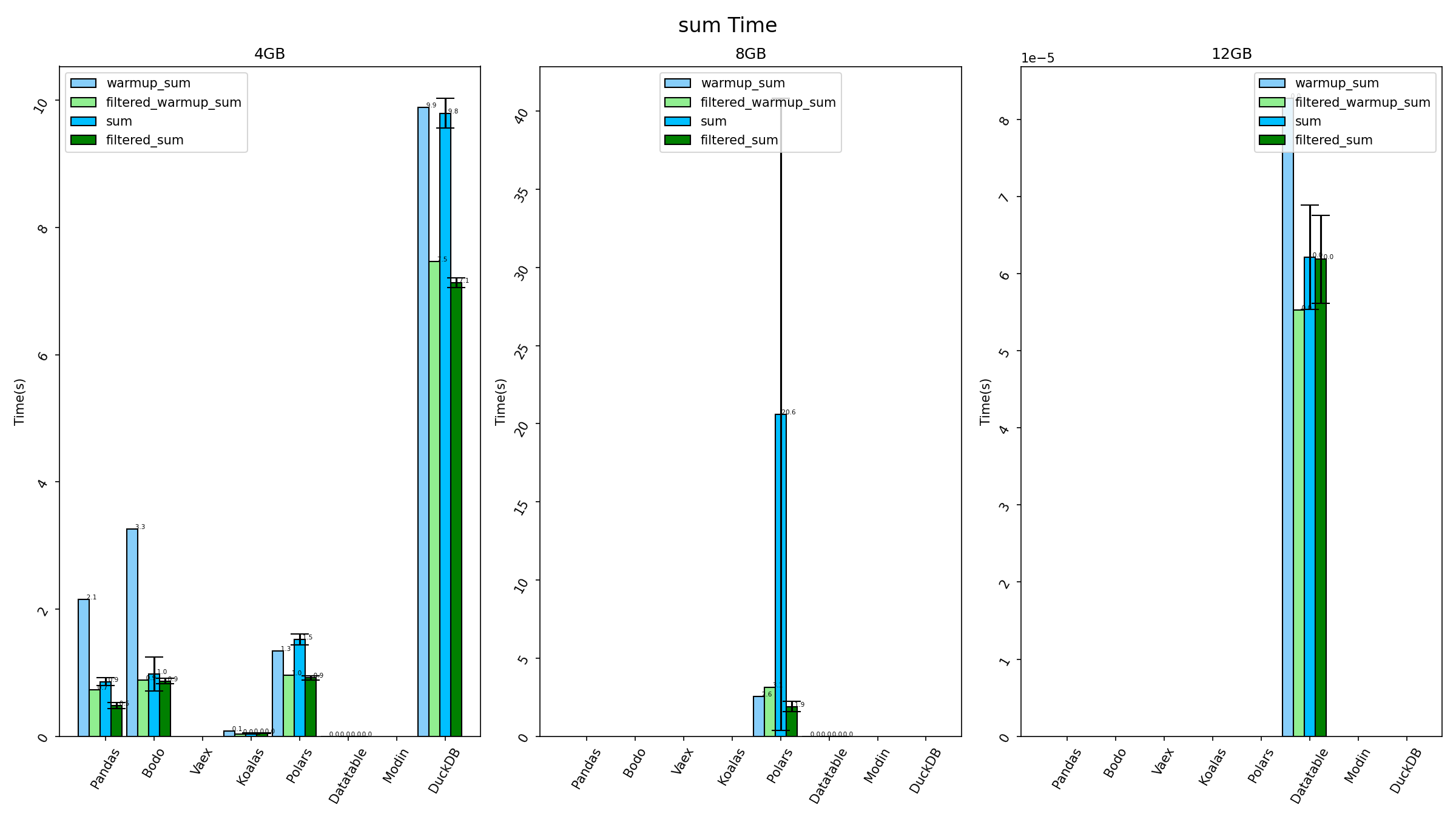

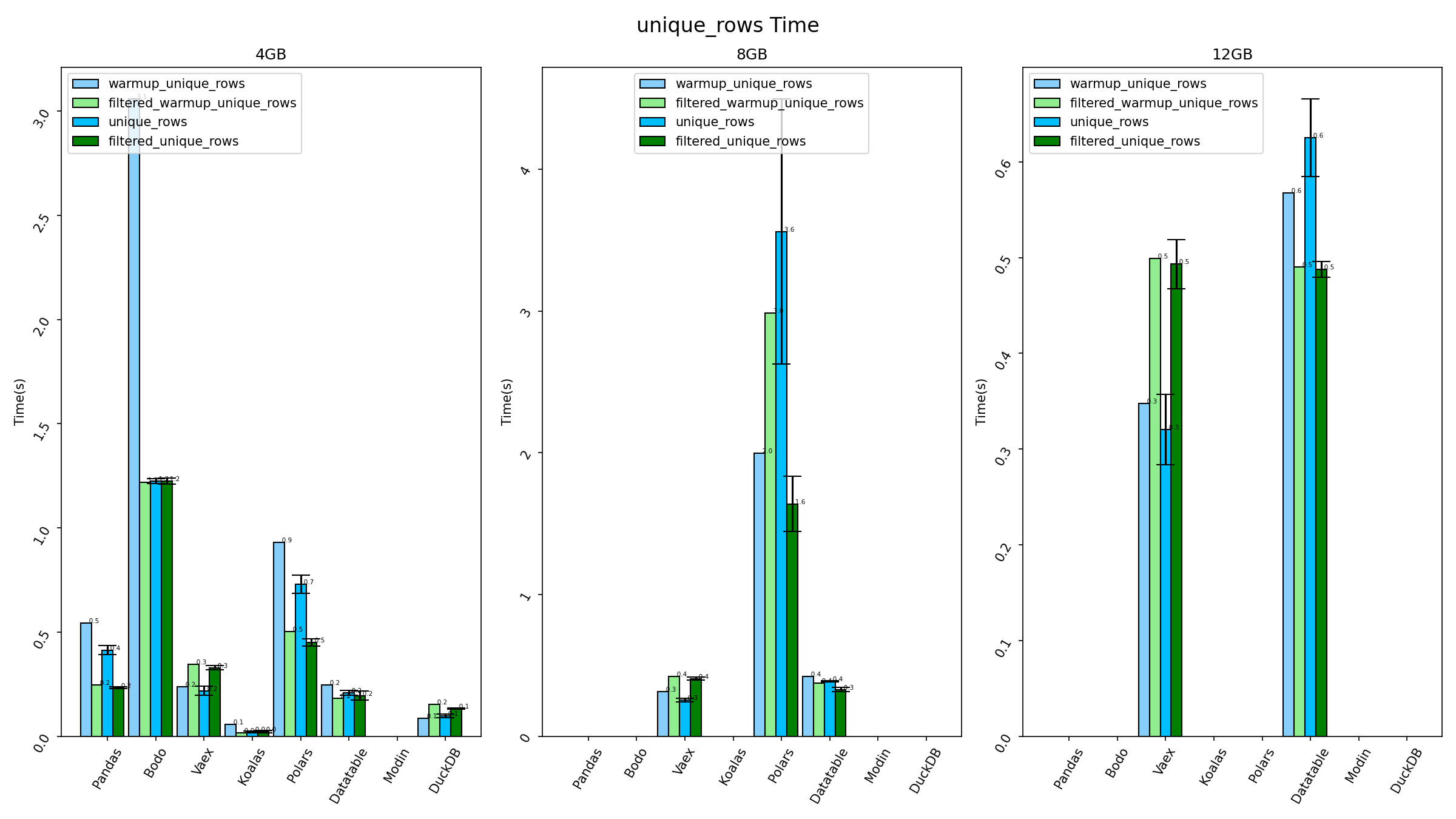

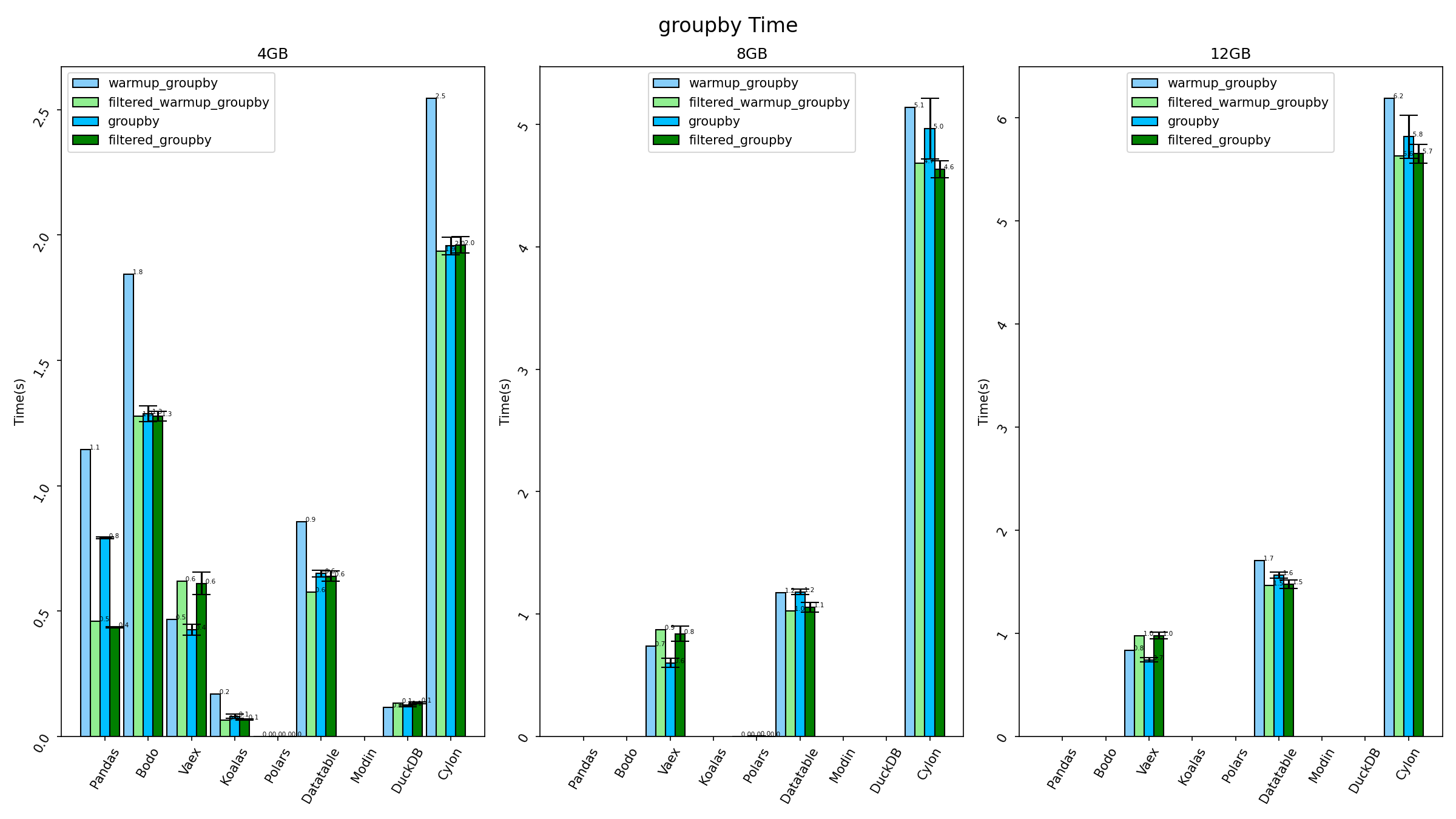

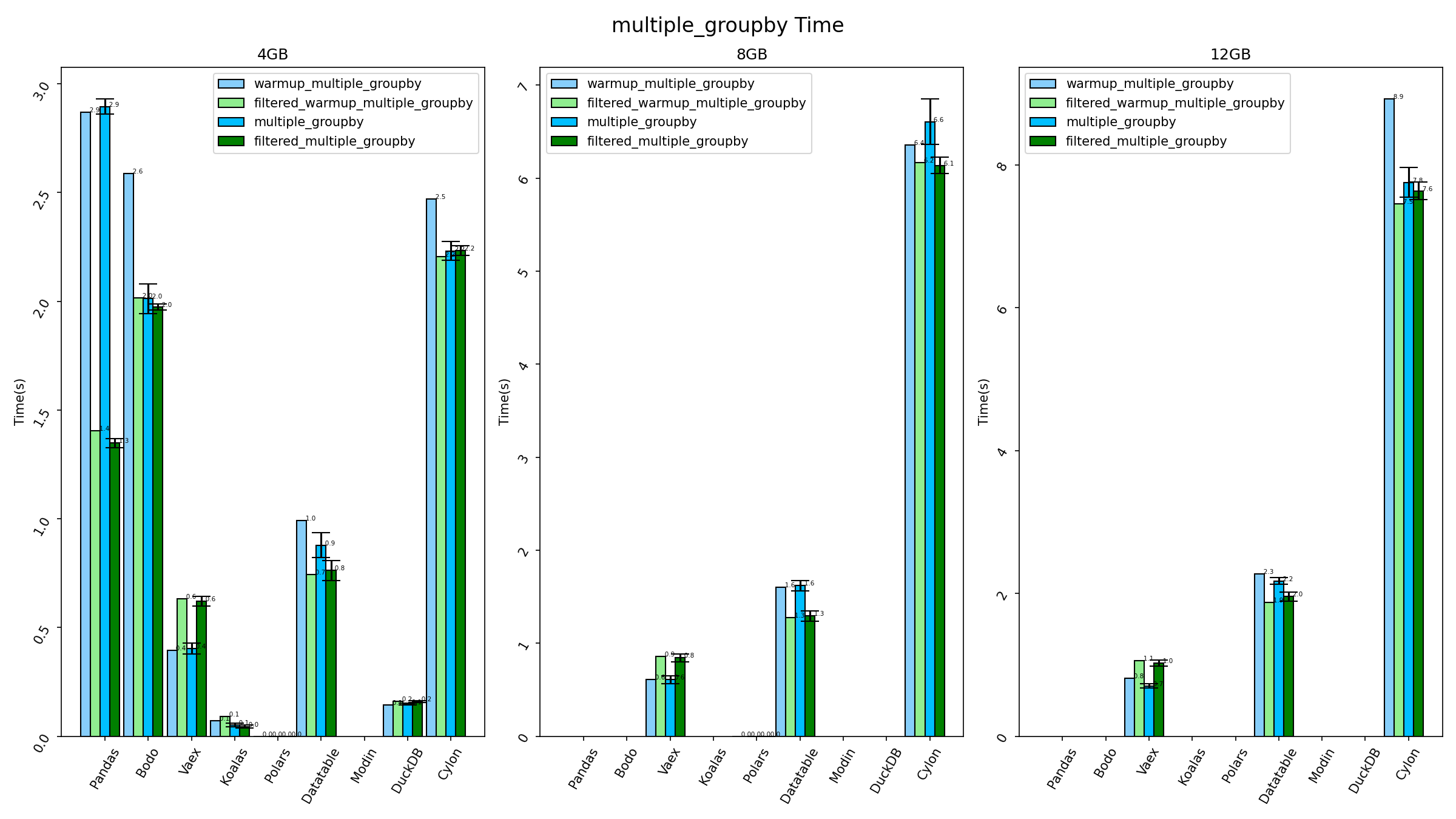

Each operation is run several times. The figures show the time for the first run (warmup), both with and without filter, and then the mean and standard deviation of the other runs.
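
The measurement scheme described here can be sketched roughly as follows. This is not the benchmark's actual harness: the `benchmark` helper, the default of five runs, and the toy workload are all assumptions made for illustration.

```python
import statistics
import time


def benchmark(operation, runs=5):
    """Time `operation` and report the warmup run separately from the rest.

    `runs` is a hypothetical default; the post does not state the real count.
    """
    if runs < 2:
        raise ValueError("need at least one warmup run and one measured run")
    timings = []
    for _ in range(runs):
        start = time.perf_counter()
        operation()
        timings.append(time.perf_counter() - start)
    warmup, rest = timings[0], timings[1:]
    return {
        "warmup_s": warmup,
        "mean_s": statistics.mean(rest),
        # Standard deviation needs at least two samples.
        "std_s": statistics.stdev(rest) if len(rest) > 1 else 0.0,
    }


# Toy workload standing in for a dataframe operation.
result = benchmark(lambda: sum(range(100_000)), runs=5)
print(result)
```

Reporting the first run on its own keeps one-off costs, such as compilation or session setup, from inflating the mean of the steady-state runs.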

The following figure shows the latency for the mean operation.

This is a simple operation and all frameworks are able to execute it within a few seconds. Bodo and Koalas take more time, particularly for the warmup run, due to compile and setup costs, respectively.

The following figure shows the latency for the sum operation.

The following figure shows the latency for the unique rows operation.

The following figure shows the latency for the group by operation.

The following figure shows the latency for the multiple column group by operation.

The following figure shows the latency for the join operation.

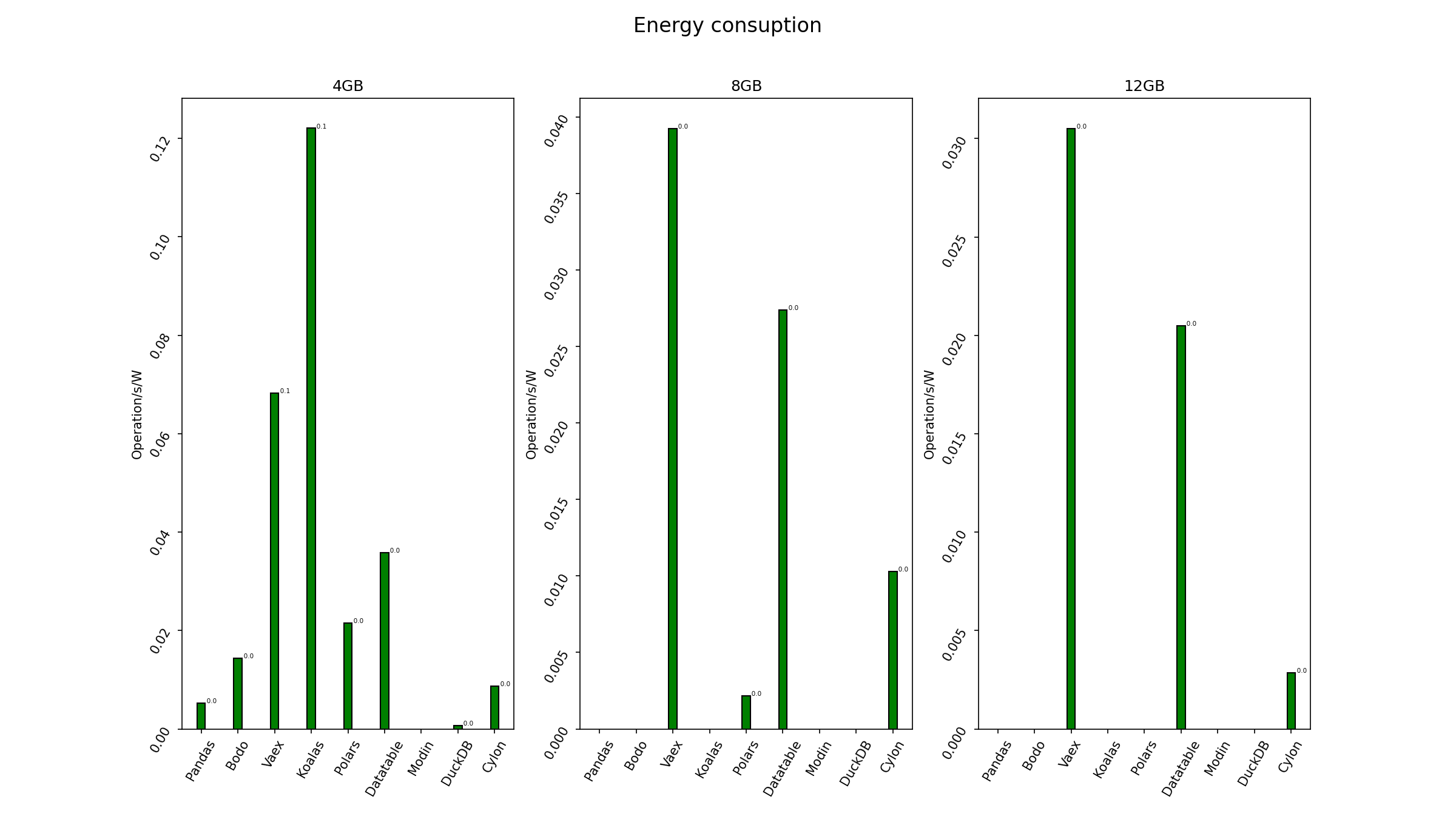

Additionally, the energy consumption of each framework during loading and execution of the operations is measured using PowerJoular. The following figure shows the number of operations executed per second per consumed watt.

As the energy consumption of the different frameworks does not differ much, the frameworks with the best throughput per watt are the ones with the best performance, and thus Vaex, Koalas, and Datatable yield the best results.
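
As a rough illustration of the metric, the sketch below divides throughput (operations per second) by mean power draw. The function name and the numbers are hypothetical and are not measurements from this benchmark; the mean power is assumed to have been derived beforehand from the power readings.

```python
def ops_per_second_per_watt(n_operations, elapsed_s, mean_power_w):
    """Throughput (operations per second) divided by mean power draw in watts."""
    if elapsed_s <= 0 or mean_power_w <= 0:
        raise ValueError("elapsed time and mean power must be positive")
    throughput = n_operations / elapsed_s
    return throughput / mean_power_w


# Two made-up frameworks with similar power draw but different run times.
fast = ops_per_second_per_watt(10, elapsed_s=2.0, mean_power_w=50.0)
slow = ops_per_second_per_watt(10, elapsed_s=8.0, mean_power_w=45.0)
print(fast, slow)
```

With power draw this close, the faster framework scores higher even though it draws slightly more power, which mirrors the observation that efficiency here tracks performance.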

In summary, the results show that most frameworks were unable to load datasets larger than 4GB and that, for these operations, the energy efficiency of the frameworks is closely tied to their performance. Vaex, Koalas and Datatable are the frameworks with the best performance on the small dataset, but Koalas (with default configurations) is unable to scale to large datasets.
